This is an update on a previous post on the technical infrastructure I run at home. This post will be similarly structured to its predecessor. I will start with giving a detailed description of the base network, continue with my storage setup and finish with compute.

Tier I: Physical layout & network

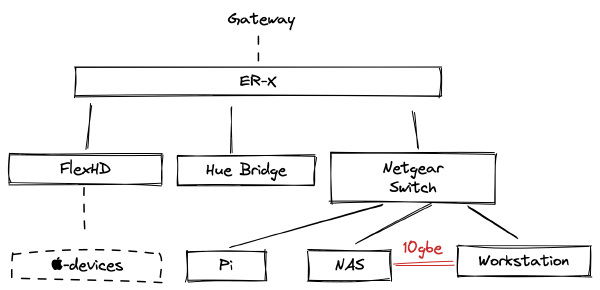

Router: Two years after my initial post I’m still happily using my EdgeRouter-X. It works fine for my needs, although I’m probably gonna switch to a self-built router at some point due to upgrading to 10G/25G Ethernet. Running 10G at home was quite pricey just a couple years ago, but these days you can find older 10GBit cards like the Mellanox ConnectX-3 cards for less than 50€.

Wireless AP: In the last post I talked about the old travel-size Wi-Fi AP I was using. Since then, I’ve switched to using a Ubiquiti FlexHD allowing me to get up to 1.7 Gbps throughput between devices on Wi-Fi. Of course, devices are throttled to 1 Gbps due to Ethernet when accessing wire-bound devices or the internet gateway.

Switching: I am still running some super low-cost low-energy 5-Port Netgear switch (GS105A) I got a couple of years ago for less than 20€.

WAN Access: I don’t get a public IPv4 (and IPv6 doesn’t work at all). I also don’t want to expose anything from my home network publicly on the internet. Luckily, I discovered the Tailscale project some time ago. The utility they provide for free is truly amazing. Thanks to Tailscale, it doesn’t really matter if I’m at home or work remotely, I’m guaranteed to have access to any machine on my home network anyway. This in combination with Wake-On-Lan on the larger machines makes for a pretty efficient compute-on-demand setup I will talk about further in the T3 section.

10GbE P2P: I have a single 10GbE connection running from my main workstation to my storage server. This is mainly to speed things up in case my workstation needs to access large datasets or perform backups. I used some cheap ConnectX-3 EN CX331A NICs I got on Ebay as well as the most basic 10G SFP+ transceivers from FS.com I could get my hands on. I guess I could’ve used a DAC cable as well, but fiber is just far more flexible and fun.

DNS: My Raspberry runs a CoreDNS instance serving as both resolver as well as nameserver for my home network DNS zone. It serves different IPs for the same zone depending which interface requests the information.

For example, when a DNS request digs for the virtm.home.espe.tech entry from the Tailscale link, the CoreDNS server will respond with the Tailscale IP of my storage server.

When the same request comes in from the local LAN port, it will respond with the local subnet IP from the 192.168.0.1/24 range.

You may ask Why CoreDNS? Well, It’s easy to write custom plugins for. This will be useful later when I want to add automatic DNS entry generation for my virtual machines.

Tier II: Storage & media

NAS: Back in 2020 I used a second hand Synology DS213 from Ebay. It did fine most of the time, but sometimes my TimeMachine backups wouldn’t even finish because operations timed out (which was kind of annoying!). At the end of 2020, I replaced my old workstation (a very reliable Intel 3770K) with an Ryzen 5950X-based platform, so the my 3770K is now “busy” serving files. It exposes both AFS and SMB shares.

I migrated all the old mdraid off to a new ZFS-based storage array made out of three 4TB IronWolf RED disks. I always thought that Synology uses some sort of custom RAID system? But no, it’s just a Linux software raid.

Performance wise, I’m pretty happy.

# dd if=/dev/zero of=test1 bs=2G count=1 conv=fsync

2147479552 bytes (2.1 GB, 2.0 GiB) copied, 12.2618 s, 175 MB/s

# dd if=test1 of=/dev/zero bs=2G count=1 (after cache flush)

2147479552 bytes (2.1 GB, 2.0 GiB) copied, 8.44784 s, 254 MB/s

The performance is roughly equal to single disk speeds which is totally fine for me. It’s good enough for backups and the occasional dataset scans.

The storage server keeps

- backups of my other devices

- regularly updated GDPR takeout data from major cloud services like Google and Apple

- mirror of debian testing repository (all of my Linux devices are Debian based, speeds things up quite a bit)

- up-to-date archives of important documentation and services (like Wikipedia)

- some datasets I keep for private project purposes

Earlier this year, my gateway connection got interrupted for almost a week. Having stored offline copies of some useful sites like Wikipedia was a lifesafer during that time.

I also noticed that YouTube is probably the biggest time-waste I use during the day. I deinstalled the app from my phone, which resulted in two things: YouTube is now ad-free thanks to ad-blockers. I watch less vaguely interesting videos.

ZFS allows me to do this without a lot of maintenance. I’ve read that it’s actually NOT recommended to use non-ECC RAM with ZFS due to checksumming, but so far my datasets haven’t been completely ruined because ZFS decides to rewrite them due to checksum mismatch. Although I should probably monitor for this. The block-level compression feature works pretty fine for me too and saves me around 20% of disk space.

Raspberry: The Pi is the only non-(router|switch|home-automation) device that is always on.

It provides AirPlay using shareplay and the HifiBerry DAC platform.

The only real issue I have with this setup is that Windows does not support AirPlay streaming.

The Pi also hosts a Gitea instance for storing some repository mirroring from GitHub as well as throwaway repository storage.

It also provides a simple link shortening service reachable under go/.

Tier III: Compute

I actually got around to build my own little compute platform called virtm. It runs on the aforementioned storage server.

I will probably open-source it in the future, its feature set is quite minimal but for my core use cases it works pretty well.

It exposes both a web-based UI as well as a gRPC API with an accompanying command line client.

It uses libvirt with qemu-kvm to manage virtual machines and is tightly integrated into my networking setup.

Referring back to my old post, I wanted

| Requirement | Status |

|---|---|

| Flexible networking | every VM is reachable locally as well as over Tailscale |

| Low configuration and maintenance overhead | few configuration changes therefore low maintainance |

| Support for multiple tenants for sharing with friends | no, but they can use Valar if it fits their use case |

| Auto-assign DNS records | no, but shouldn’t be a big problem |

| Only-run-what-you-use | solved by wake on lan |

I can create new Debian-based Virtual Machines with just a couple of clicks. After confirming, VirtM generates a new qcow2 image for my VM, customizes it and starts the instance.

It’s a pretty solid user flow so far. Starting, stopping and tearing down VMs works well, too. I’ll look into

- cross-machine private networks

- online migration

- displaying CPU, memory and disk usage in the UI

- easy SSH sessions via web UI or CLI (something along

virtm ssh [machine]) next.

I will post more information on VirtM in the future, so stay tuned and subscribe to my Atom feed.